Do AI and Human Experts See Pneumonia the Same Way?

Short answer: No.

In our latest study, Giacomo Cirò and Davide Beltrame explore whether deep learning models use the same visual cues as medical professionals when diagnosing pneumonia in pediatric chest X-rays.

They trained four CNNs (AlexNet, VGG-16, ResNet-50, InceptionNet-V1) on 5,000+ labeled X-rays, reaching over 90% classification accuracy.

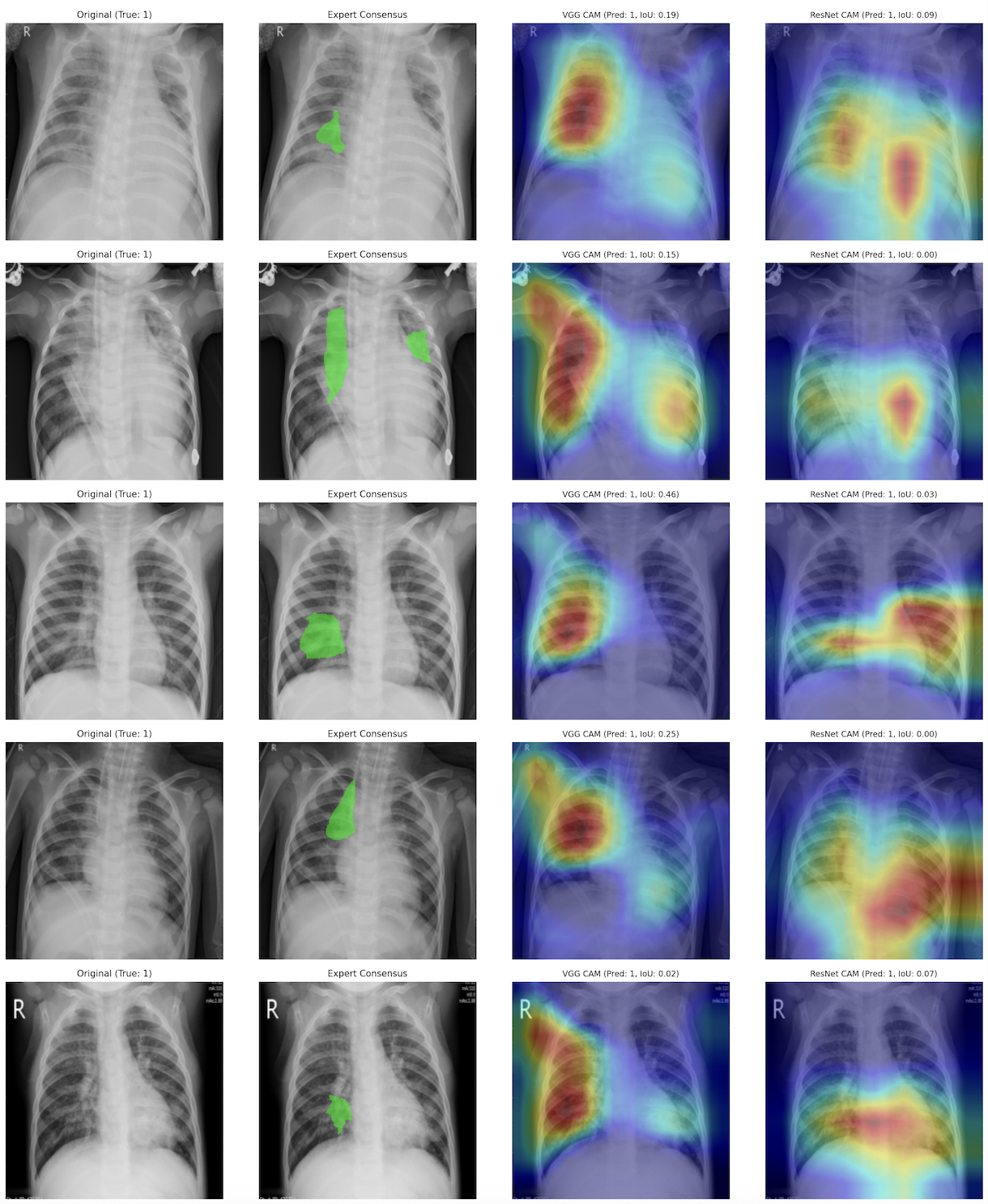

To assess interpretability, they compared the models' saliency maps with 300+ expert annotations (from 14 medical students and doctors) on 50 test images, using two metrics: Intersection over Union and the Pointing Game.
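For readers unfamiliar with the two metrics, here is a minimal sketch of how they are commonly computed. Intersection over Union measures how much a model's highlighted region overlaps an expert's marked region, and the Pointing Game checks whether the single most salient pixel lands inside the expert's region. This is an illustration, not the study's code: the function names, the toy 4x4 arrays, and the rule of keeping the top 25% of pixels to binarize the saliency map are all assumptions made for this example.

```python
import numpy as np


def binarize_saliency(saliency, keep_fraction=0.25):
    """Turn a saliency map into a binary mask of its most salient pixels.

    Assumption: a quantile threshold; the post does not say how maps were binarized.
    """
    threshold = np.quantile(saliency, 1.0 - keep_fraction)
    return saliency >= threshold


def iou(mask_a, mask_b):
    """Intersection over Union of two binary masks (0.0 if both are empty)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(a, b).sum() / union)


def pointing_game_hit(saliency, expert_mask):
    """True if the single most salient pixel falls inside the expert's region."""
    row, col = np.unravel_index(np.argmax(saliency), saliency.shape)
    return bool(expert_mask[row, col])


# Toy data (hypothetical): the model highlights a 2x2 patch,
# the expert marked a slightly wider 2x3 region.
saliency = np.zeros((4, 4))
saliency[1, 1], saliency[1, 2] = 0.9, 0.8
saliency[2, 1], saliency[2, 2] = 0.7, 0.6

expert = np.zeros((4, 4), dtype=bool)
expert[1:3, 1:4] = True

model_mask = binarize_saliency(saliency, keep_fraction=0.25)
print("IoU:", iou(model_mask, expert))
print("Pointing Game hit:", pointing_game_hit(saliency, expert))
```

Averaging the hit indicator over all image and annotation pairs gives a Pointing Game accuracy, and averaging IoU gives an overlap score. The two can disagree, since a map may peak in the right place while covering a much larger or smaller area than the expert marked.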

Key insight: High accuracy does not mean expert-aligned reasoning.

- The most accurate model was not the one that aligned best with expert annotations.
- One model with solid accuracy aligned with the experts no better than chance.

This highlights a critical point: achieving trustworthy AI in medical imaging means explicitly optimizing for human-aligned explanations, not just maximizing classification accuracy.